Protecting wildlife with machine learning

Deploying a smart camera trap in a remote location to help save elephants and rhinos

At Hack The Planet, the tech-for-good venture of Dutch digital product studio Q42, we help solve (global) challenges using pragmatic technology. Doing so brings us into contact with many different people, countries and issues, like protecting elephants and rhinos in national parks with smart solutions.

In 2018, we were working on a prototype of our anti-poaching concept in Zambia. From the local rangers we heard how they use camera traps in the wild. These traps are widely used in conservation for different purposes, ranging from biodiversity monitoring to anti-poaching.

These cameras are pretty cool for what they do, but they are not very smart. Rangers often need to visit the cameras to retrieve the photos, because the photos are stored on the device itself. Since these areas are huge, this takes a lot of time.

Now you might think an easy fix would be to use cameras with a GSM uplink. Unfortunately, in Zambia mobile phone coverage is flaky or absent altogether.

So how do we go about this?

Machine learning to the rescue

What if camera traps knew what was in the photo and could alert rangers when something of particular interest was detected? A ranger could then react immediately if a poacher was caught on camera. That would be a major game changer!

But it doesn’t stop at anti-poaching. There are many areas in conservation where a smart camera can provide invaluable information. For instance, human-elephant conflict, caused by the expansion of human settlements and the loss of elephant habitat, is becoming a big issue in many parts of the world. It leads to the loss of both human and elephant lives. A system that automatically identifies elephants can function as an early warning system, so that unfortunate encounters between people or villages and elephants can be avoided. This is very achievable with machine learning, but the right model and data are key.

Wildlife conservation is a difficult field of expertise. A super smart machine learning solution on its own will not cut it. Any solution you’re ever going to invent needs to work in practice. In our case, this means that rangers and national park authorities need to be able to work with it. Resources are always scarce, deploying hardware in the bush is tough, and weather conditions are challenging, to put it mildly.

With that in mind, we wanted to look at what’s already out there and how we could potentially upgrade that instead of starting from scratch.

To reinvent the wheel or not

It was very tempting to start from scratch and totally reinvent the camera trap. But we knew this would not be the best solution, for two reasons:

- The camera trap itself is very good at what it does. It’s optimised for taking pictures in the most challenging conditions, so there is no need to improve on this.

- There are already many camera traps in the field. An add-on for these existing camera traps saves the cost of buying new ones.

We started working on a system that uses as much existing hardware as possible. We used ‘off the shelf’ camera traps and fitted them with a custom chip. Whenever these camera traps detect movement and capture a photo, the chip kicks in and sends a LoRa message to our Smart Bridge. The Bridge is placed high up in a tree and wakes up when it receives the LoRa message from the camera trap. Next, it retrieves the images from the camera trap and analyses them using a machine learning model. The results of the analysis are then relayed over a satellite uplink.

Thanks to the satellite uplink, this system can be deployed anywhere in the world, without depending on GSM, WiFi or LoRa network coverage. Sounds easy, but it was quite an effort to put it all together...
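The Smart Bridge's wake-up flow described above can be sketched in a few lines of Python. This is a minimal, hypothetical sketch, not our actual firmware: the hardware-specific steps (reading photos from the camera trap, running the model, talking to the satellite modem) are passed in as plain functions so the control flow can be tested without any hardware. Forwarding only labels of interest above a confidence threshold is an assumption on our part, in line with alerting rangers only when something of particular interest is detected; the label names and the 0.6 threshold are illustrative.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Detection:
    """One classified photo (hypothetical field names)."""
    filename: str
    label: str
    confidence: float


def handle_wake_up(
    fetch_new_images: Callable[[], List[str]],
    classify: Callable[[str], Detection],
    send_via_satellite: Callable[[List[Detection]], None],
    labels_of_interest: frozenset = frozenset({"elephant", "human"}),
    min_confidence: float = 0.6,
) -> List[Detection]:
    """Run one wake-up cycle after a LoRa trigger from the camera trap.

    1. Retrieve the new photos from the camera trap.
    2. Classify each photo on the device.
    3. Relay only relevant detections, because satellite bandwidth is
       scarce and costly.
    """
    images = fetch_new_images()
    detections = [classify(image) for image in images]
    relevant = [
        d for d in detections
        if d.label in labels_of_interest and d.confidence >= min_confidence
    ]
    if relevant:  # skip the satellite call entirely when nothing matters
        send_via_satellite(relevant)
    return relevant
```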

About machine learning

Recognising what's in a photo is what's called an image classification task in machine learning. Image classification is a well-studied field: many examples, libraries and pre-trained models are available online. However, most of these examples stay in the prototyping phase, and there is much less information on how to use these models in production. On top of that, we needed to go one step further: using these models in production on battery-powered hardware with limited resources, such as a Raspberry Pi.

Since we weren't deep learning experts to begin with, we found the fantastic fast.ai course Practical Deep Learning for Coders and started playing around with training models. We soon realised that this is a great platform that makes training good models really accessible, even with limited data! After a deep dive into the world of neural networks, it was time to try and actually use them. This is where a big challenge came up.

As great as the fast.ai platform is for training and optimising models, we found much less information on how to run these models efficiently, especially on a Raspberry Pi. It took quite some time and many apt-get and pip install attempts to get everything up and running.

But we finally managed it! The first model we tested was a pretty big ResNet-50 model, both in terms of file size (around 100 MB) and input size (576x768 pixels). It took around 14 seconds to load the model and classify a single image on a Raspberry Pi 4. In terms of inference time and battery consumption, this was not going to be viable…

Different model, different problems

We needed to find a model that runs faster on the device, so we ran performance tests with several neural network architectures on the Raspberry Pi 4.

These results were already much better and gave us lots of insights into which neural network architectures could work for us. But before choosing one of the fast.ai options we tested, we also wanted to try TensorFlow.
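Performance tests like these boil down to timing two things separately: loading the model and classifying an image. A minimal sketch of such a harness, using only the Python standard library, might look like this; `load_model` and `run_inference` are hypothetical placeholders for whichever framework is under test. We assume loading deserves its own number because a device that boots up for each trigger has to load the model again every time.

```python
import statistics
import time
from typing import Any, Callable, Dict


def benchmark(
    load_model: Callable[[], Any],
    run_inference: Callable[[Any], Any],
    runs: int = 10,
    warmup: int = 1,
) -> Dict[str, float]:
    """Time model loading and per-image inference separately (in seconds)."""
    if runs < 1:
        raise ValueError("runs must be at least 1")

    start = time.perf_counter()
    model = load_model()
    load_s = time.perf_counter() - start

    # The first calls often include one-off initialisation; keep them out
    # of the inference statistics.
    for _ in range(warmup):
        run_inference(model)

    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        run_inference(model)
        timings.append(time.perf_counter() - start)

    return {
        "load_s": load_s,
        "mean_inference_s": statistics.mean(timings),
        "median_inference_s": statistics.median(timings),
    }
```

For a real comparison, `load_model` would read the model file and `run_inference` would classify one preprocessed test image; running the same harness for each architecture keeps the numbers comparable.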

Training a model in TensorFlow Lite

TensorFlow Lite offers many examples of running models on different edge devices, including the Raspberry Pi. A TensorFlow Lite model is, as the name suggests, a 'lite' version of a machine learning model that's optimised for inference on low-end devices. TensorFlow Lite models are often quantised: a neural network that uses floating-point numbers is approximated by a neural network that uses low bit-width numbers, for instance 8-bit integers instead of 32-bit floats. This dramatically reduces both the memory requirements and the computational cost of running neural networks.
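To make the idea of quantisation concrete, here is a simplified, per-tensor sketch of affine 8-bit quantisation in plain Python. Each real value is represented as `real ≈ scale * (q - zero_point)`, with `q` an 8-bit integer. It illustrates the principle only: TensorFlow Lite's converter adds refinements such as per-channel scales for weights, and the function names here are our own.

```python
from typing import List, Tuple


def quantise(values: List[float], num_bits: int = 8) -> Tuple[List[int], float, int]:
    """Map a non-empty list of floats to signed integers with an affine scheme.

    real_value ≈ scale * (q - zero_point), q in [-2**(b-1), 2**(b-1) - 1].
    """
    qmin, qmax = -(2 ** (num_bits - 1)), 2 ** (num_bits - 1) - 1
    # Include 0.0 in the range so that zero is represented exactly.
    lo, hi = min(min(values), 0.0), max(max(values), 0.0)
    scale = (hi - lo) / (qmax - qmin) or 1.0  # all-zero input: any scale works
    zero_point = round(qmin - lo / scale)
    q = [max(qmin, min(qmax, round(v / scale) + zero_point)) for v in values]
    return q, scale, zero_point


def dequantise(q: List[int], scale: float, zero_point: int) -> List[float]:
    """Recover approximate real values from the quantised integers."""
    return [scale * (x - zero_point) for x in q]
```

Storing each value in 1 byte instead of 4 is where the roughly fourfold reduction in model size comes from; the price is a small rounding error per value of at most about one quantisation step (`scale`).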

TensorFlow offers tools to convert a TensorFlow model to a TensorFlow Lite model. Quantising the model during this conversion is pretty straightforward and is called post-training quantisation.
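As a sketch, full-integer post-training quantisation with the TensorFlow 2 `tf.lite.TFLiteConverter` API looks roughly like this. It assumes a model exported in SavedModel format and a set of preprocessed sample images (float32 NumPy arrays with the model's input shape) for calibrating value ranges; the sample limit is illustrative. TensorFlow is imported inside the conversion function so the calibration helper can be used on its own.

```python
from typing import Callable, Iterable, Iterator, List


def make_representative_dataset(
    samples: Iterable, limit: int = 200
) -> Callable[[], Iterator[List]]:
    """Wrap calibration samples in the form TFLiteConverter expects.

    The converter calls the returned function and iterates over it; each item
    is a list with one input array per model input (we assume one input).
    """
    chosen = list(samples)[:limit]  # materialise so it can be iterated repeatedly

    def representative_dataset() -> Iterator[List]:
        for sample in chosen:
            yield [sample]

    return representative_dataset


def convert_with_ptq(saved_model_dir: str,
                     representative_dataset: Callable[[], Iterator[List]]) -> bytes:
    """Convert a SavedModel to a full-integer (uint8 in/out) TensorFlow Lite model."""
    import tensorflow as tf  # requires TensorFlow 2.x

    converter = tf.lite.TFLiteConverter.from_saved_model(saved_model_dir)
    converter.optimizations = [tf.lite.Optimize.DEFAULT]
    # Calibration data lets the converter measure activation ranges.
    converter.representative_dataset = representative_dataset
    # Raise an error instead of silently keeping float ops.
    converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS_INT8]
    converter.inference_input_type = tf.uint8
    converter.inference_output_type = tf.uint8
    return converter.convert()
```

The returned bytes can be written straight to a `.tflite` file and copied to the device.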

However, we learned that training a well-performing model is much harder with TensorFlow. Even some of the sample training scripts provided by Google yielded terrible results (the comments even said so…). Surely this is a case of “it’s me, not you”. But we found it much harder to get decent results with manual TensorFlow training than with the it-just-works experience we had with fast.ai.

If anyone has tips for training an image classification model in TensorFlow, please feel free to reach out. Your help is greatly appreciated! 😊

AutoML

Since we were somewhat frustrated with manual training in TensorFlow, we decided to give Google’s AutoML a shot. With AutoML, you upload your labelled dataset and AutoML does its magic for you. We analysed the resulting model and compared it with the fast.ai one. To our surprise, the AutoML model was really good, even though it was trained on smaller images (224x224)!

We downloaded the quantised TensorFlow Lite model and ran it on the Raspberry Pi. With an inference time of only 100 milliseconds per image, this was the obvious choice. Ladies and gentlemen, we have a winner! 🎉
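Running such a quantised model on the Raspberry Pi takes only a few calls on a TensorFlow Lite `Interpreter`. The sketch below classifies one already-preprocessed image and converts the quantised output scores back to real values using the output tensor's `(scale, zero_point)` quantisation parameters. It assumes an interpreter from the `tflite_runtime` package (or `tf.lite`) on which `allocate_tensors()` has already been called; the label names are hypothetical, and loading and resizing the image to 224x224 are left out.

```python
from typing import Any, Sequence, Tuple


def top_prediction(
    interpreter: Any, input_tensor: Any, labels: Sequence[str]
) -> Tuple[str, float]:
    """Run one image through a TensorFlow Lite interpreter; return the top label.

    `input_tensor` must already have the model's input shape (e.g. 1x224x224x3)
    and dtype (uint8 for a fully quantised model).
    """
    input_details = interpreter.get_input_details()[0]
    output_details = interpreter.get_output_details()[0]

    interpreter.set_tensor(input_details["index"], input_tensor)
    interpreter.invoke()
    raw_scores = interpreter.get_tensor(output_details["index"])[0]

    # Quantised outputs are integers: real = scale * (q - zero_point).
    # A scale of 0 means the output tensor is not quantised.
    scale, zero_point = output_details["quantization"]
    if scale:
        scores = [scale * (float(q) - zero_point) for q in raw_scores]
    else:
        scores = [float(s) for s in raw_scores]

    best = max(range(len(scores)), key=scores.__getitem__)
    return labels[best], scores[best]
```

On the Pi, the interpreter could be created once per boot with `from tflite_runtime.interpreter import Interpreter`, `interpreter = Interpreter(model_path="model.tflite")` and `interpreter.allocate_tensors()`; the file name is illustrative.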

To make sure the model was accurate as well as fast, we compared a fast.ai model with the AutoML model. Even though the fast.ai model was trained on larger images, the AutoML model came really close and was sometimes even better.
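A back-of-the-envelope comparison shows why the smaller input matters: for a convolutional network, the work per layer grows roughly in proportion to the number of input pixels. Our first ResNet-50 model's 576x768 input has about 8.8 times as many pixels as AutoML's 224x224 input:

$$
\frac{576 \times 768}{224 \times 224} = \frac{442\,368}{50\,176} \approx 8.8
$$

This is only a rough indication. The two models use different architectures, and the 14 seconds measured earlier also included loading the model, so the pixel ratio does not directly explain the full speed-up.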

How the system works

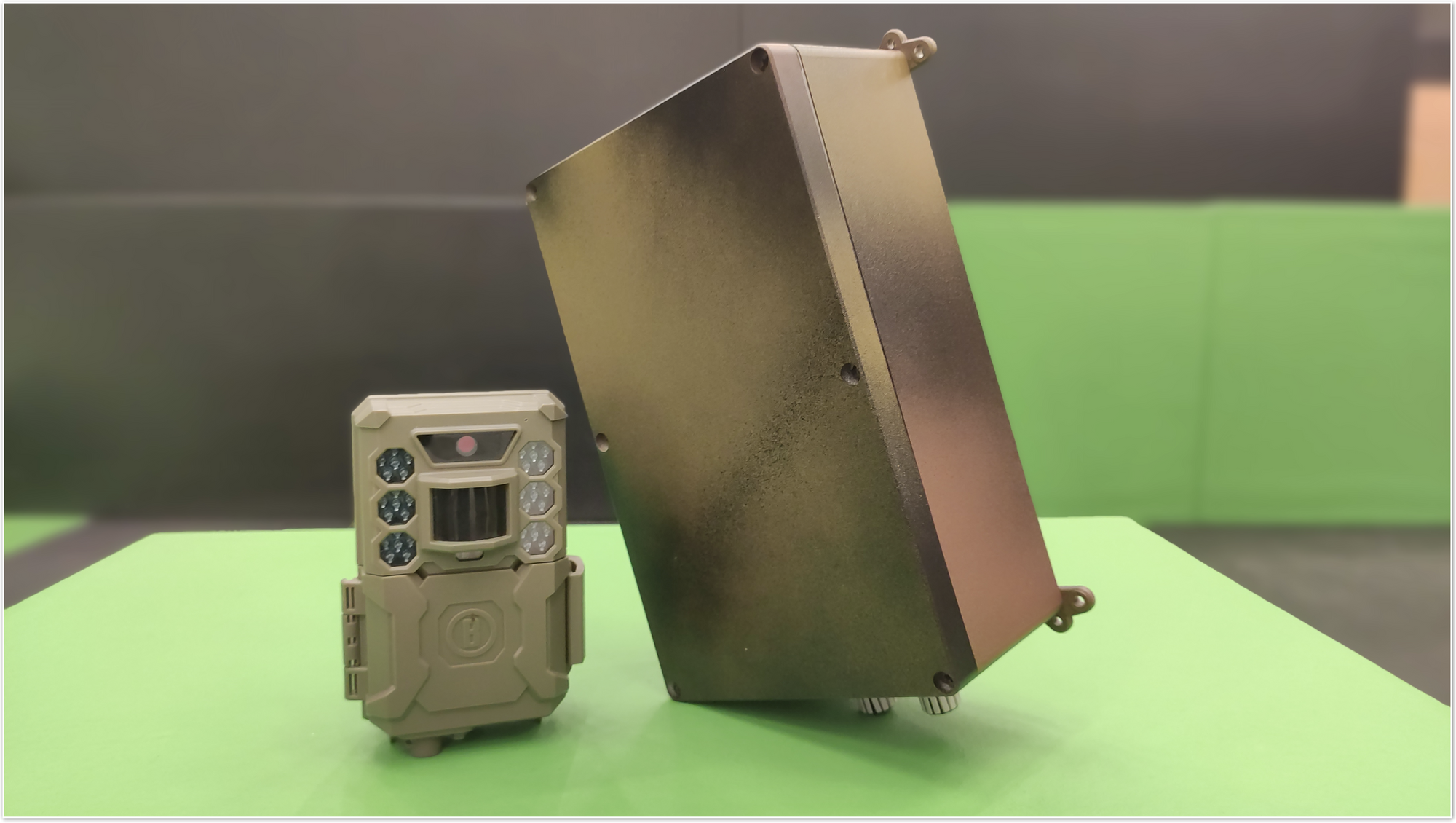

Now that we had a working neural network, it was time to get the whole system up and running. The system consists of:

- a modified Bushnell camera trap fitted with a WiFi SD card

- a Smart Bridge which consists of a custom board developed by our hardware partner Irnas, a Raspberry Pi and a RockBLOCK

- a solar panel to power the Smart Bridge

When the camera trap takes a picture, it sends a LoRa message to the nearby Smart Bridge. On receiving this message, the Smart Bridge boots up the Raspberry Pi, which connects to the WiFi SD card. The Raspberry Pi then retrieves and classifies the images using the TensorFlow Lite model, and sends the result via the RockBLOCK over the Iridium satellite network. The data reaches our servers within minutes of the camera trap taking the picture.
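Satellite bandwidth is scarce: a RockBLOCK sends Iridium Short Burst Data messages of at most 340 bytes, so it makes sense to send a classification result as a compact binary record rather than as an image or verbose JSON. The layout below is a hypothetical example, not our actual message format; the fields simply mirror what the system reports (a detection plus the battery levels of the camera trap and the Smart Bridge).

```python
import struct
from typing import Any, Dict

# Hypothetical 12-byte layout, big-endian, no padding:
#   camera_id    uint16  identifier of the camera trap
#   timestamp    uint32  Unix time in seconds
#   label_index  uint8   index into a label list shared by bridge and server
#   confidence   uint8   confidence scaled from 0.0-1.0 to 0-255
#   camera_mv    uint16  camera trap battery voltage in millivolts
#   bridge_mv    uint16  Smart Bridge battery voltage in millivolts
MESSAGE_FORMAT = ">HIBBHH"
MAX_MO_BYTES = 340  # RockBLOCK mobile-originated (outgoing) message limit


def encode_detection(camera_id: int, timestamp: int, label_index: int,
                     confidence: float, camera_mv: int, bridge_mv: int) -> bytes:
    """Pack one detection into a fixed-size binary record."""
    conf_byte = round(max(0.0, min(1.0, confidence)) * 255)
    payload = struct.pack(MESSAGE_FORMAT, camera_id, timestamp, label_index,
                          conf_byte, camera_mv, bridge_mv)
    if len(payload) > MAX_MO_BYTES:
        raise ValueError("payload too large for one satellite message")
    return payload


def decode_detection(payload: bytes) -> Dict[str, Any]:
    """Server-side counterpart of encode_detection."""
    camera_id, timestamp, label_index, conf_byte, camera_mv, bridge_mv = struct.unpack(
        MESSAGE_FORMAT, payload)
    return {
        "camera_id": camera_id,
        "timestamp": timestamp,
        "label_index": label_index,
        "confidence": conf_byte / 255,
        "camera_mv": camera_mv,
        "bridge_mv": bridge_mv,
    }
```

At 12 bytes per record, up to 28 detections would fit in a single 340-byte message.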

Testing and results

It’s really scary when your code runs in a place where you can’t access or debug it. So aside from writing plenty of unit tests, we decided it was time for field testing!

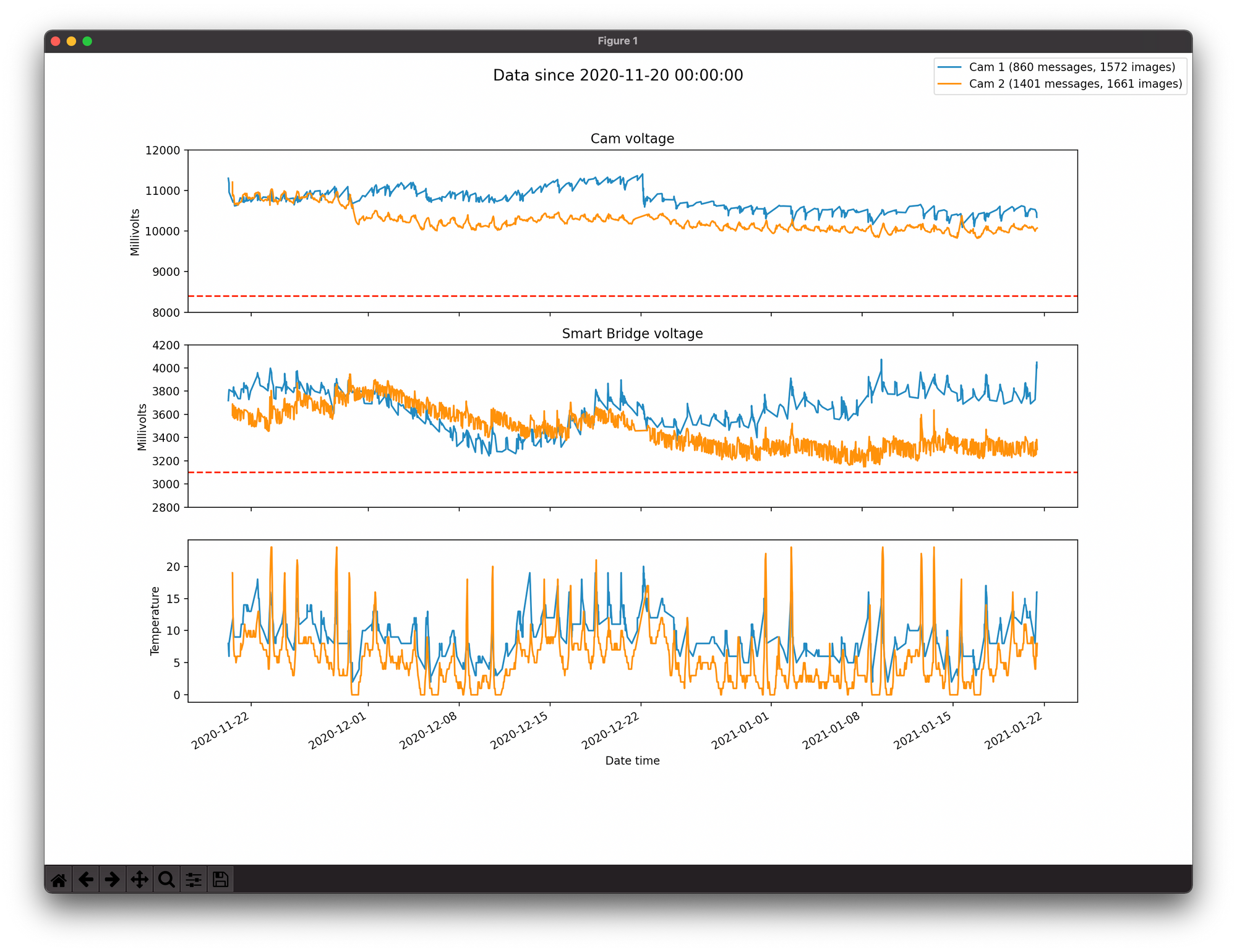

We placed one system in Thijs’ backyard, taking pictures of wildlife, a.k.a. his kids. 😉 We also set up a system in timelapse mode on the rooftop of our office. The system has been up and running for a couple of months now and everything is working perfectly. We see data from the camera traps in our backend, and we can remotely monitor the battery life of both the camera traps and the Smart Bridges.

We see that the Smart Bridge charges nicely from solar power (even in cloudy Dutch weather). We are confident it will be able to run autonomously for a very long time.

What we are most interested in is the battery life of the camera trap. Since we made some modifications, this will surely affect the battery life of the Bushnell, so we are eager to find out how long it will last.

Seeing this data also made us realise another big advantage of our system: rangers can remotely monitor the hardware. This way they no longer have to guess when it's time to replace those batteries. 🔋
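With battery levels arriving in the backend, the question "when should these batteries be replaced?" can be approached with a simple trend line. The sketch below is a crude, hypothetical estimate: it assumes the device reports a battery percentage now and then, and fits a straight line by ordinary least squares, even though real battery discharge is not linear. Treat it as a rough planning aid rather than a prediction; the 20% threshold is arbitrary.

```python
from typing import List, Optional, Tuple


def days_until_threshold(readings: List[Tuple[float, float]],
                         threshold: float = 20.0) -> Optional[float]:
    """Estimate days until the battery level drops to `threshold` percent.

    `readings` are (day, battery_percent) pairs. Fits a straight line by
    least squares and extrapolates from the latest reading. Returns None
    when there is too little data or the level is not falling.
    """
    if len(readings) < 2:
        return None
    n = len(readings)
    mean_x = sum(x for x, _ in readings) / n
    mean_y = sum(y for _, y in readings) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in readings)
    if sxx == 0:  # all readings on the same day: no trend to fit
        return None
    slope = sum((x - mean_x) * (y - mean_y) for x, y in readings) / sxx
    if slope >= 0:  # not discharging, e.g. charging from a solar panel
        return None
    intercept = mean_y - slope * mean_x
    day_at_threshold = (threshold - intercept) / slope
    latest_day = max(x for x, _ in readings)
    return max(0.0, day_at_threshold - latest_day)
```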

Now what?

In the world of conservation you often find yourself in situations where you need to work with what you’ve got. Many times, that’s not much!

We are very happy to have achieved these results in such a short time, and they are better than we could have hoped for. The system is now being stress-tested in the cold, rainy weather of the Netherlands, and everything is still looking great! Many conservation parties have shown interest in the system, and we are planning to deploy the smart camera traps in Asia and Africa later this year. After that, we expect more national parks to follow quickly, contributing to a decrease in poaching numbers.

Do you have any ideas or suggestions after reading about our smart camera trap? Please don’t hesitate to contact us: thijs@q42.nl and timvd@q42.nl. More info on our anti-poaching project can be found here: www.hackthepoacher.com. Check out Twitter and LinkedIn for updates on our project!

📣 We would like to thank Robbie Whytock from the University of Stirling and National Parks Agency of Gabon for providing the dataset for training the models and for collaborating on the system’s design.

🙌 We want to thank our friends from https://appsilon.com. They helped us in training the ML models. We learned a lot from them about deep learning!

Are you one of those engineers who love to sink their teeth into machine learning and use technology for the greater good? Then do check out our job vacancies (in Dutch) at https://werkenbij.q42.nl!