Making wildlife cameras smart with embedded AI

How diving head first into the unknown yielded a microcontroller that can recognise hippos

As a developer, it is generally good practice to think about how you are going to build something before you get going. But once in a while you’re presented with a challenge where you just have to dive in and figure it out as you go. In this blog post I’ll take you through how diving head first into the unknown yielded a microcontroller that can recognise hippos.

Upgrading our smart cameras

First a bit of context. At our tech-for-good venture Hack The Planet we have a fair bit of experience with developing and deploying wildlife detection systems for our clients in both Africa and Europe. These systems all contain a camera and a separate detection system, which determines what’s in the pictures taken by the camera. This detection system can be a cloud system or a Raspberry Pi mounted in a special enclosure up in a nearby tree. This split approach has worked quite well for us, but it’s not ideal. We can no longer manufacture some versions of the system, as the specific off-the-shelf cameras we used are no longer for sale. The current system is also fairly slow to react: establishing a local wireless or cloud connection between the camera and the detection system, and waiting for the detection system to be ready, can easily take up to thirty seconds. That is too long if you want to do real-time detection in order to, for example, scare a bear away from a barn full of pigs. Despite these shortcomings, our detection systems have proven their worth in the field and demand for them is growing.

With the smart camera systems scaling up, the aforementioned issues also scale up. This prompted us to start developing our own, fully custom, smart camera system. This approach does have its own drawbacks, but it would make us independent from existing camera brands and allow us to implement all sorts of speed improvements and really tailor the system to our use cases.

We even got a client on board who wants to deploy our new camera system in a research project to see if it can be used to mitigate conflicts between humans and hippos. Now, I am no biologist, but to my understanding hippos are made up of about 5% fat, 50% muscle and the remaining 45% of their body mass is just pure condensed hatred for anything that moves. So it seemed like a sensible goal to see if we could keep hippos and humans away from each other.

For a few months now, my colleague Thijs has been spearheading the development of this new camera system. We’ve been exploring several options, including developing our own camera platform from scratch and taking over the development of an existing smart camera platform. Whichever camera we end up with in the field, it needs to be made smart. Ideally, we’d also have the smart part done by the time the camera system itself is ready. That’s where I come in, and that’s what I will take you through in this blog post.

Designing our AI module

To give our camera its “AI smarts” we envisioned some sort of AI module that we could integrate into a camera.

We had some requirements for this module:

- Low power: the whole system needs to run for months on a single battery charge.

- Fast: detection results need to be ready in under a second.

- Universal: ideally we’d want this module to have some sort of generic interface so we can integrate it in various types of cameras.

- Cheap: the people who use our camera systems are conservation NGOs and researchers, so we want to keep the price as low as possible.

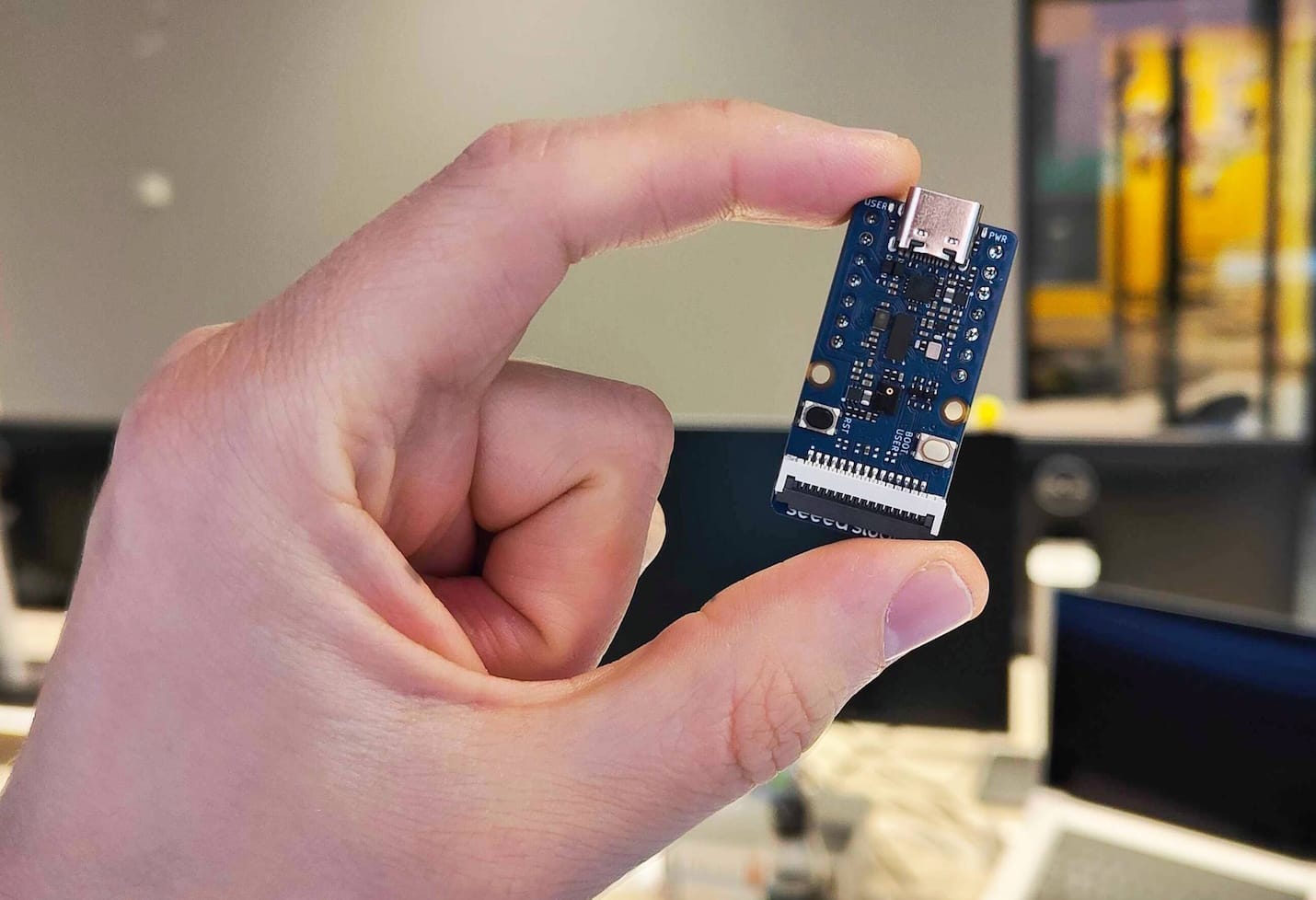

The power requirements meant that a full-on computer like a Raspberry Pi was out of the question. Microcontrollers, like Arduinos, are typically very low power but don’t have enough processing capacity to run computer vision models. Fortunately, over the last few years new microcontrollers optimised for AI computer vision have come to market. After evaluating a few options, we landed on the Seeed Studio Grove Vision AI Module V2, which we’ve affectionately shortened to “the Grove”. This is a small microcontroller board featuring the Himax WiseEye2, which combines an ARM Cortex CPU with an integrated NPU. An NPU, or Neural Processing Unit, is a processor highly optimised for running neural networks like computer vision models.

The Grove ticked all our boxes. The included example applications showed great potential, and we measured its power consumption at just 0.1 watts while running. That is about 1/40th of the power draw of an idle Raspberry Pi 5. The only downside was that all of the Grove’s example projects assume you want to use Seeed Studio’s own model training software and run detection on images from a camera sensor connected directly to the Grove. We would have to figure out how to train our own models in a way that made them compatible with the Grove. Besides a custom model, we also needed the Grove to let us (quickly) load in an external image and get detection results for it.
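To put those numbers in perspective, here is a back-of-the-envelope runtime estimate in Python. Only the 0.1 W active figure comes from our measurement, and the Raspberry Pi 5 figure is simply derived from the “about 1/40th” comparison. The 100 Wh battery, the 5% duty cycle and the 5 mW sleep draw are hypothetical values picked for illustration, not specs of our system.

```python
"""Back-of-the-envelope battery runtime estimate.

Only the 0.1 W active figure is measured; the battery capacity,
duty cycle and sleep power used below are hypothetical.
"""

GROVE_ACTIVE_W = 0.1               # measured while running detections
PI5_IDLE_W = GROVE_ACTIVE_W * 40   # implied by the "about 1/40th" comparison


def average_power_w(active_w: float, sleep_w: float, duty_cycle: float) -> float:
    """Average draw when the module is active for `duty_cycle` (0..1) of the time."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    return active_w * duty_cycle + sleep_w * (1.0 - duty_cycle)


def runtime_days(battery_wh: float, avg_power_w: float) -> float:
    """Days a battery of `battery_wh` watt-hours lasts at a constant average draw."""
    return battery_wh / avg_power_w / 24.0


if __name__ == "__main__":
    battery_wh = 100.0  # hypothetical battery pack
    # Running flat out, all day long:
    print(f"Grove, always on:   {runtime_days(battery_wh, GROVE_ACTIVE_W):.0f} days")
    print(f"Pi 5, idle:         {runtime_days(battery_wh, PI5_IDLE_W):.1f} days")
    # Hypothetical: active 5% of the time, drawing 5 mW while asleep.
    avg = average_power_w(GROVE_ACTIVE_W, sleep_w=0.005, duty_cycle=0.05)
    print(f"Grove, duty-cycled: {runtime_days(battery_wh, avg):.0f} days")
```

With these made-up values, an always-on Grove would last roughly 42 days versus about a day for an idle Pi 5. The estimate also shows how much the duty cycle matters: a module that sleeps between detections stretches the same battery much further.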

Getting the Grove to do what we want it to do

Our use case did not deviate much from some of the Grove’s example projects, so getting it to do what we wanted should be pretty simple, right? There were two small issues. First, the documentation for the Grove claims a lot is possible, but contains little to no information on how to actually use many of those features. Second: I am not an embedded developer.

Together, these two factors meant there was no real point in trying to design our custom firmware beforehand. Without knowing exactly where the finish line was, or how to get there, I just had to dive in, submerge myself in the world of memory addresses, toolchains and header files, and figure things out along the way.

Writing the firmware

My first goal was to gain a better understanding of how the Grove and its firmware worked. The Grove firmware is open source, but there are multiple (official) forks. I eventually settled on an example “app” in one of those forks, which used YOLOv8n object detection models to analyse an image feed from a connected camera sensor. Because the documentation was so sparse and scattered, it took me a good few days to figure out how to build the example firmware and flash it, along with a computer vision model, to the Grove. To keep the number of variables manageable, I opted for a ready-made computer vision model from Seeed Studio. Having a known good model allowed me to focus on just the firmware for now.

In most projects we do at digital product studio Q42, we can simply use the debugger in our IDE to troubleshoot and analyse the code we’re writing. When you’re writing code for an embedded platform like the Grove, that becomes a lot more difficult, as the code is not running on your computer. Some microcontrollers let you connect a hardware debugging probe to inspect the code running on them. Unfortunately, the Grove is not one of them. This meant it was time for some good old console logging over serial. Once I had figured out how to crank up the Grove’s serial data rate, I was able to tap into the detection functions and see what the data looked like on its way from the image sensor to the computer vision code.

When I write it down like this, it all sounds fairly simple. But this was one of those processes where the amount of code produced is fairly small, yet it takes loads of time to figure out how to write that little bit of code. For example, one thing that stumped me for a while was why the image data I was getting back was so dark. Did I incorrectly assume a data type? Was there some sort of scaling that needed to be applied to the values? Nope. It turns out the camera sensor just needed a few captures to get its auto exposure dialled in, and I was always reading the first, dramatically underexposed capture…
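The auto exposure pitfall boils down to discarding the first few frames before trusting the sensor. The Grove firmware itself is written in C; the sketch below shows the idea in Python for readability. `capture` is a hypothetical stand-in for whatever function reads a frame from the sensor, and the warm-up count and brightness tolerance are assumed values, not numbers from the Grove documentation.

```python
from typing import Callable, Sequence

Frame = Sequence[int]  # hypothetical: a frame as a flat sequence of 8-bit pixel values


def mean_brightness(frame: Frame) -> float:
    """Average pixel value, a rough proxy for how well exposed a frame is."""
    return sum(frame) / len(frame)


def capture_settled(capture: Callable[[], Frame], warmup_frames: int = 3) -> Frame:
    """Discard `warmup_frames` captures so auto exposure can settle, then return the next one."""
    for _ in range(warmup_frames):
        capture()  # result intentionally ignored: likely under-exposed
    return capture()


def capture_until_stable(capture: Callable[[], Frame], tolerance: float = 2.0,
                         max_frames: int = 10) -> Frame:
    """Keep capturing until mean brightness changes by at most `tolerance`
    between consecutive frames, giving up after `max_frames` captures."""
    previous = mean_brightness(capture())
    for _ in range(max_frames - 1):
        frame = capture()
        current = mean_brightness(frame)
        if abs(current - previous) <= tolerance:
            return frame
        previous = current
    raise TimeoutError("exposure did not settle")
```

A fixed warm-up count is the simplest option. Waiting for the brightness to stabilise adapts better to changing light, at the cost of a variable number of captures.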

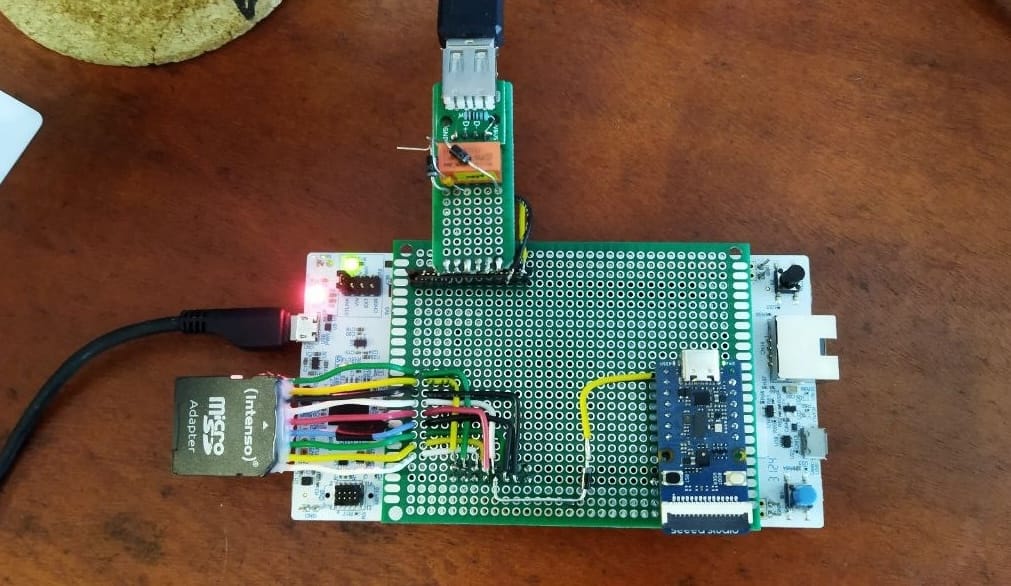

After a short break to let the “my god, I’m an idiot” feeling subside, it was time for the first milestone. I now had the two components I needed to send an image into the Grove: working serial communication and knowledge of what data the computer vision model functions expected. So I designed a very crude image transfer protocol and implemented it in the Grove firmware. To test it, I also wrote a Python script that let me use my computer as the host device and send images to the Grove.
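For readers wondering what a crude image transfer protocol might look like, here is a minimal sketch of one way to frame an image for a serial link: a fixed-size header with a marker, the image dimensions, the payload length and a CRC32 checksum, followed by the raw pixels. The `IMG0` marker, the field layout and the chunk size are all hypothetical; this is not the actual protocol we used.

```python
import struct
import zlib

MAGIC = b"IMG0"                     # hypothetical frame marker
HEADER = struct.Struct("<4sHHII")   # magic, width, height, payload length, CRC32 (16 bytes)


def encode_frame(width: int, height: int, pixels: bytes) -> bytes:
    """Prefix raw pixel bytes with a fixed-size header the firmware can validate."""
    return HEADER.pack(MAGIC, width, height, len(pixels), zlib.crc32(pixels)) + pixels


def decode_frame(frame: bytes) -> tuple[int, int, bytes]:
    """Inverse of encode_frame; raises ValueError on a malformed or corrupted frame."""
    if len(frame) < HEADER.size:
        raise ValueError("frame shorter than header")
    magic, width, height, length, crc = HEADER.unpack_from(frame)
    if magic != MAGIC:
        raise ValueError("bad magic")
    payload = frame[HEADER.size:HEADER.size + length]
    if len(payload) != length:
        raise ValueError("truncated payload")
    if zlib.crc32(payload) != crc:
        raise ValueError("checksum mismatch")
    return width, height, payload


def send_image(port, width: int, height: int, pixels: bytes, chunk_size: int = 4096) -> None:
    """Write one frame, in chunks, to any object with a write() method."""
    frame = encode_frame(width, height, pixels)
    for start in range(0, len(frame), chunk_size):
        port.write(frame[start:start + chunk_size])
```

On the host side, `send_image` accepts any object with a `write()` method, such as a pyserial `serial.Serial("/dev/ttyACM0", 921600)` instance (the port name and baud rate there are placeholders). On the firmware side, the same checks (marker, length, checksum) would be done in C before handing the pixels to the model.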

So after a few weeks of trying to wrap my head around embedded C/C++, I finally had a working prototype that showed we could send our own images into the Grove and get a result back. 🎉

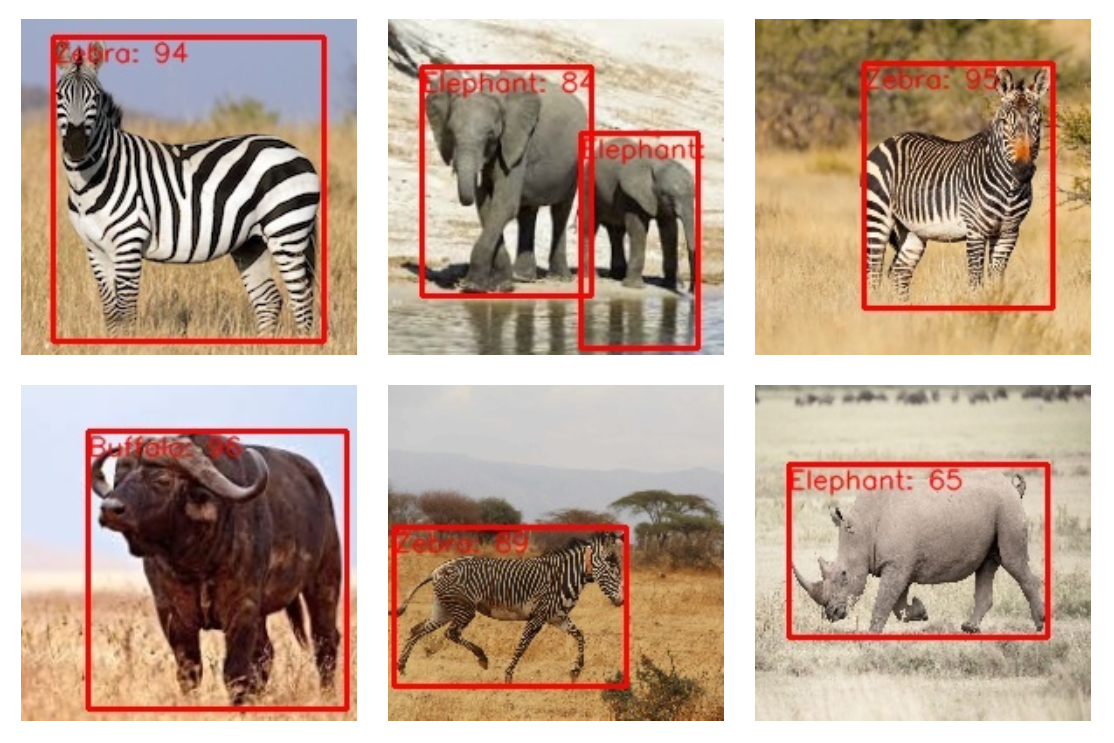

Getting the model ready

Now that the firmware was somewhat functional, I could shift my focus to the computer vision model. Just as with the firmware, figuring out how to create a model suitable for running on the Grove would be a process of puzzling together various bits of documentation and a whole lot of reverse engineering. Some of the example projects for the Grove used int8 YOLOv8n models, so that’s where I started. These are small but fairly performant object detection models whose weights (normally 32-bit floating-point numbers) have been quantised to 8-bit integers (int8) to save memory. To give an example, the YOLOv5 model we use in our cloud-connected bear detection system is about 12 MB in size. The model I had been testing with on the Grove was only about 2 MB. Besides being small enough to fit and run on the Grove, the model also had to be converted so that it could run on the Grove’s NPU, which has its own special instruction set. By the time I had pieced together which parameters the model needed and how it could be converted, Thijs had put together a dataset of hippo images with bounding boxes. He then used that dataset to train and convert a hippo detection model.
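To illustrate what quantising weights to int8 means, here is a minimal sketch of per-tensor affine (asymmetric) quantisation, where each float weight w is stored as an 8-bit integer q with w ≈ scale × (q − zero_point). Each weight then takes one byte instead of the four bytes a 32-bit float needs. This is a simplified illustration: real conversion toolchains typically use more refined schemes (for example per-channel scales), and it is not the exact process we used for the Grove.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float, int]:
    """Per-tensor affine int8 quantisation: w ≈ scale * (q - zero_point)."""
    lo = min(min(weights), 0.0)   # the range must include 0.0 so it maps to an exact integer
    hi = max(max(weights), 0.0)
    scale = (hi - lo) / 255.0 or 1.0          # 256 int8 levels; avoid a zero scale
    zero_point = round(-128 - lo / scale)     # the integer that represents 0.0
    q = [max(-128, min(127, round(w / scale) + zero_point)) for w in weights]
    return q, scale, zero_point


def dequantize_int8(q: list[int], scale: float, zero_point: int) -> list[float]:
    """Recover approximate float weights from their int8 codes."""
    return [scale * (v - zero_point) for v in q]


if __name__ == "__main__":
    weights = [-1.0, -0.25, 0.0, 0.5, 2.0]
    q, scale, zp = quantize_int8(weights)
    print("int8 codes:", q)
    print("restored:  ", [round(w, 3) for w in dequantize_int8(q, scale, zp)])
    # Each restored weight is off by at most about half a quantisation step (scale / 2).
```

The trade-off is precision: every weight is snapped to one of 256 levels, which is why quantised models can lose a little accuracy in exchange for a four-fold reduction in weight storage.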

The moment of truth

With both the firmware and the model ready, it was time for the moment of truth. Did we succeed in getting a microcontroller to detect hippos?

To run our test, we got a new dataset of images captured in the national park where the first cameras would be deployed. I started up our Python testing scripts and ran the images through the Grove. The feeling I got when I saw the first real detection results come back was nothing short of euphoric. The detections on that real-life dataset were 99.16% accurate. After weeks of reverse engineering, piecing together documentation and actually learning embedded C, I had reached the finish line. I was able to answer the question “Could we use the Grove to make our cameras smart?” with a resounding YES!

By now we had also brought an electrical engineer, Kevin, onto the project to focus on integrating the Grove into one of our new camera solutions. So I handed off my prototype firmware, Python test scripts and documentation to him. He tried to set up communication with the Grove over SPI, because it is a lot faster than sending an image over serial. But after many frustrating hours, he concluded that serial was the way to go, as SPI support on the Grove was incredibly poorly documented and implemented. After some more work, he managed to optimise our serial image transfer and turn my crude protocol into a more robust one. These improvements made the image transfer fast enough to get results back in under one second.
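Why serial speed matters so much becomes clear with some quick arithmetic. With the common 8N1 UART framing, every byte costs 10 bits on the wire (one start bit, eight data bits, one stop bit), so a link at a given baud rate moves at most baud / 10 bytes per second. The image sizes and baud rates below are hypothetical examples, not the values used on the Grove.

```python
def uart_transfer_seconds(payload_bytes: int, baud: int, bits_per_byte: int = 10) -> float:
    """Time to push `payload_bytes` over a UART link.

    With 8N1 framing each byte costs 10 bits on the wire, so throughput
    is baud / 10 bytes per second. Protocol headers, acknowledgements
    and processing time on either end are ignored.
    """
    return payload_bytes * bits_per_byte / baud


if __name__ == "__main__":
    # Hypothetical image formats and baud rates, for comparison only.
    images = {"240x240 RGB": 240 * 240 * 3, "192x192 grayscale": 192 * 192}
    for name, size in images.items():
        for baud in (115_200, 921_600):
            seconds = uart_transfer_seconds(size, baud)
            print(f"{name:18} @ {baud:>7} baud: {seconds:5.2f} s")
```

For instance, a 240×240 RGB frame takes 15 s at 115,200 baud and about 1.9 s at 921,600 baud, while a 192×192 grayscale frame at 921,600 baud takes 0.4 s, before any protocol overhead. Both a higher data rate and a compact image format help towards a sub-second round trip.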

My takeaways

Now that we had the Grove up and running, and had put in a bulk order for them to use in our cameras, it was time to look back. To me, there are some things that stood out in this process of just diving in and making it work.

Breaking it down

As I mentioned earlier, I went into this project without a real plan, which made it a bit like assembling Ikea furniture without the manual. You have no idea what you’re doing, everything is confusing and you have to figure out how to use tools you didn’t even know existed. What helps in projects like these is breaking the seemingly monumental task down into smaller chunks and working on them one at a time. I did that by keeping a paper notepad and a pen on my desk at all times. Whenever I got stuck, I’d step away from my computer and write down on the notepad what a step-by-step, short-term plan to reach my next goal or sub-goal could look like. I’m normally all for note-taking apps, but having to write on paper forced me to slow down and focus on my train of thought. It also took me out of my digital context for a bit, which helps a lot when dealing with tunnel vision.

Bugging people

Now, I’d like to think of myself as a friendly, not-annoying person (although some co-workers might disagree with that statement 😉). But there are times when you need to set aside your worry about how others might perceive you and be annoyingly persistent to get what you need. One such case was when I needed some information about the Grove. Reverse engineering the answers to my questions would have taken a lot of time, so I reached out to Seeed Studio’s customer service instead. Initially they just pointed me to one of the repositories that I already knew about. It might have felt a bit rude to ask the same question again, but I did not have a satisfactory answer yet. So I kept pressing, explaining our use case for the Grove and consistently (and politely) asking them to provide us with example firmware. All this pushing and prodding eventually led Seeed Studio to put me in contact with one of the engineers who had worked on the original Grove firmware. He ended up being super helpful and provided us with a lot of valuable information and examples. We would not have gotten this if I had not kept pressing until I had the information I wanted.

Embedded C is surprisingly refreshing

Going into this project, I had virtually no relevant embedded development experience. I had written some Arduino code in my hobby projects, but this was definitely something else. Whilst at first this world of memory pointers and header files was mainly headache inducing, at a certain point I did start to grow fond of it. I’m not quite sure if it was my embedded C skills improving or just a case of Stockholm syndrome, but the codebase started feeling more homely.

Normally, with the higher-level languages we tend to use at Q42, you get error messages when something goes wrong. With embedded C, you’re lucky if you get a compilation error. Otherwise, your microcontroller will just randomly crash, get stuck in a boot loop or, with full confidence, output garbage data because you messed up somewhere. I might have wasted three days on this project because I accidentally wrote a computer vision model to the wrong memory address without realising it…
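A cheap guard against that kind of mistake is to check that the bytes at the expected location actually look like a model before using them. The sketch below assumes the model is stored as a TensorFlow Lite flatbuffer (a common format for microcontroller inference), whose file identifier `TFL3` sits at bytes 4 to 8. The flash image and offset are hypothetical; in the firmware itself, the equivalent check would be a four-byte comparison in C at the model’s address.

```python
TFLITE_IDENTIFIER = b"TFL3"  # FlatBuffers file identifier used by the TFLite schema


def looks_like_tflite(blob: bytes) -> bool:
    """True if `blob` starts like a TFLite flatbuffer: a 4-byte root offset
    followed by the 4-byte file identifier."""
    return len(blob) >= 8 and blob[4:8] == TFLITE_IDENTIFIER


def check_model_at(flash_image: bytes, offset: int) -> None:
    """Raise if the bytes at `offset` in a flash image do not look like a model.

    `offset` is wherever the firmware expects the model to live; the value
    is project-specific (hypothetical here).
    """
    if not looks_like_tflite(flash_image[offset:offset + 8]):
        found = flash_image[offset + 4:offset + 8]
        raise ValueError(f"no TFLite model at offset {offset:#x} (found {found!r})")
```

A check like this will not catch every mistake (a model for the wrong hardware still passes), but it turns "silently output garbage" into an explicit error for the most basic addressing slip.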

This environment without guard rails forces you to keep your code as simple as possible and to think, on a low level, about how it needs to handle its data and resources. This felt surprisingly refreshing; it kept me sharp. I also could not lean on AI assistance the way I normally might. Whilst AI did help me understand how to do certain things in C, and helped me flesh out some smaller bits of logic, trying to get it to implement any actual functionality proved to be a pointless exercise. Slowly but surely, I got better at writing embedded code.

Even though we don’t do a lot of (embedded) C at Q42, the skills I gained in this project are definitely applicable more broadly. For example, (embedded) C requires you to be very meticulous when debugging, a skill that comes in handy in other projects too. It’s also a lot of fun to get the chance, once in a while, to step way outside your comfort zone. Picking up a task you have no idea how to complete can be daunting. But it also provides a great opportunity to flex your creative problem-solving muscles and pick up some new skills. And even though it caused me a lot of frustration, there is something really cool about seeing a little piece of physical hardware do exactly what you want.

Now that I've gained these skills and experience with the Grove, I have to resist the newfound urge to implement AI computer vision in all sorts of hardware beyond just cameras. Although... how bad of an idea could an AI-powered toaster really be? 🤔